One of the questions I’m interested in is how changes in the technology available for creating science visualizations have affected the final products. While there are obvious differences related to the final display media (paper and ink vs. monitors and pixels), other “hidden” technologies also play a part in creating visualizations.

Let’s look at visualizations that require some sort of math to create. These include graphs and charts, but also less obviously number-based visualizations, like those showing the relationships among species.

Essentially, we base our understanding of the relationships among species upon how similar they are. Today, this means classifying many traits of different species, creating a table of the traits each species has, and determining what percentage of traits each pair of species shares.* Then we compare the percent similarity between each pair of species, and use that information to construct phylogenetic trees, which are visualizations of the relationships among the species.
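
To make the percent-similarity step concrete, here is a minimal Python sketch. Everything in it is made up for illustration: the three species, their traits, and the function names are hypothetical, and real phylogenetic software does far more than this simple count of matching traits.

```python
from itertools import combinations

# Hypothetical trait table: each (made-up) species maps trait names to states.
TRAITS = {
    "species_a": {"limbs": "four", "covering": "fur", "eggs": "no", "tail": "yes"},
    "species_b": {"limbs": "four", "covering": "fur", "eggs": "no", "tail": "no"},
    "species_c": {"limbs": "four", "covering": "scales", "eggs": "yes", "tail": "yes"},
}


def percent_similarity(a, b):
    """Percentage of traits scored for both species that have the same state."""
    scored_in_both = [trait for trait in a if trait in b]
    if not scored_in_both:
        raise ValueError("the two species have no traits scored in common")
    matches = sum(1 for trait in scored_in_both if a[trait] == b[trait])
    return 100.0 * matches / len(scored_in_both)


def pairwise_similarity(table):
    """Percent similarity for every pair of species in the table."""
    return {
        (x, y): percent_similarity(table[x], table[y])
        for x, y in combinations(sorted(table), 2)
    }


if __name__ == "__main__":
    for pair, score in pairwise_similarity(TRAITS).items():
        print(pair, score)
```

A table of pairwise scores like this is the kind of input a tree-building program would then work from. With only three species you could do it by hand, but the number of pairs grows quickly as you add more.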

Clearly, some mathematical calculations need to be done here. And the more traits and species you are trying to deal with, the more complex the calculations become. Today’s biologists use computers, and even supercomputers, to crunch all the numbers that need crunching. Once that step is done, other computer programs help them construct the phylogenetic trees.

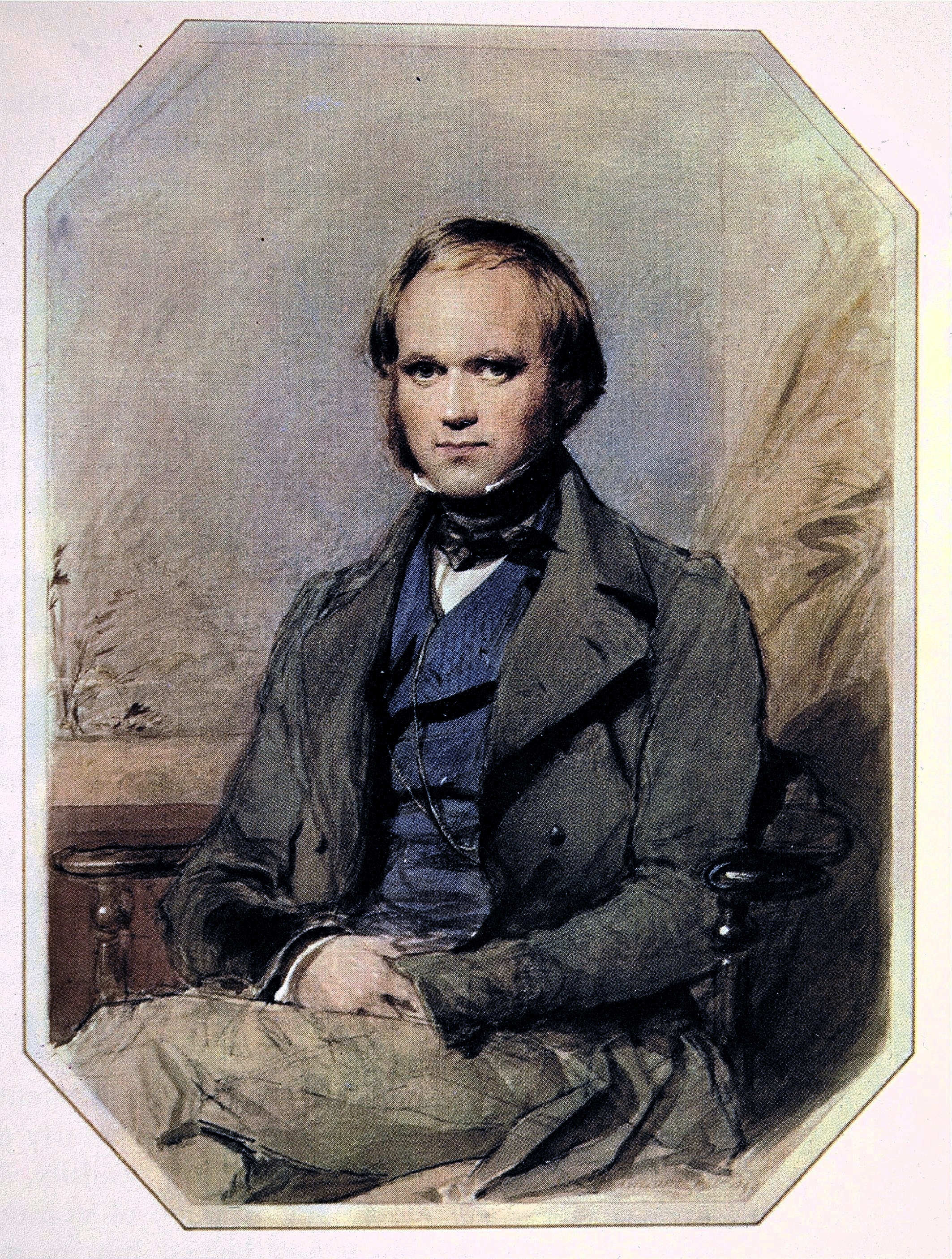

So, let’s step back in time to the early days of evolutionary biology. In Darwin’s day, this mathematical approach to evolutionary biology didn’t exist. Species were classified in a more qualitative way, but one that was still based upon similarities and differences. This approach relied more on the judgment of the individual scientist in determining which species were most closely related, rather than on a compilation of percentages. The visualizations that resulted relied less on math, and more on individual judgment and traditional conventions of design.

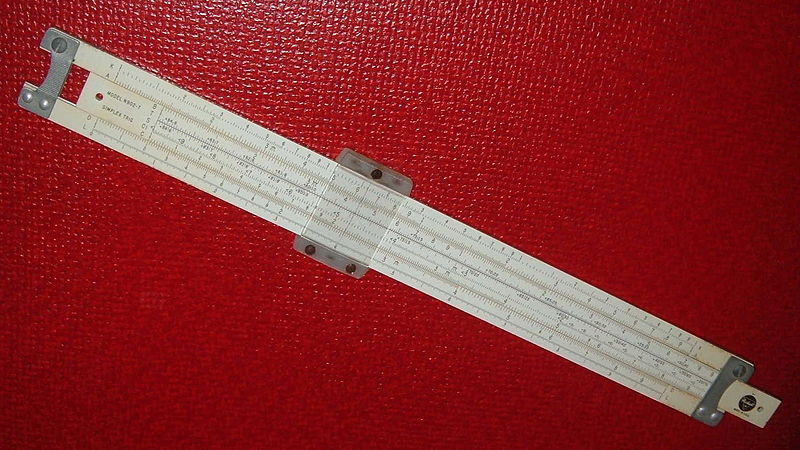

But does this mean that early biologists didn’t use math when creating visualizations? Not at all. Most probably used at least some math to help them figure out relationships between species. And (to get a bit off-topic) they also used complex math for other aspects of biology, such as determining population densities. To help them in this task, they actually had a fair array of tools, such as logarithmic tables, Napier’s bones, slide rules, and possibly even mechanical calculators.**

But were these tools really widespread among biologists? And did the introduction of more powerful calculating devices help spur the change in biology that led to the number-heavy world of today? I suspect the answer to the latter question is yes.

So, getting at the title of this post, did Darwin use a slide rule? My (admittedly limited, so far) research hasn’t been able to turn up any examples of this. However, I did find an autobiography of his fellow biologist, Alfred Russel Wallace, that talks about using a slide rule as a boy:

“My brother had one of these rules, which we found very useful in testing the areas of fields, which at that time we obtained by calculating the triangles into which each field was divided. To check these calculations we used the slide-rule, which at once showed if there were any error of importance in the result. This interested me, and I became expert in its use, and it also led me to the comprehension of the nature of logarithms, and of their use in various calculations.” (From Wallace, A. R. 1905. My life: A record of events and opinions. London: Chapman and Hall. Volume 1. via Charles Darwin Online)

If Wallace used a slide rule, it’s reasonable to think Darwin might have too. And early visualizations of evolution probably did use tools other than pens, ink, and straight brainpower.

* I’m oversimplifying this a bit. It also matters whether shared traits reflect shared ancestry rather than convergent evolution, among other factors. Because of these factors, some of the traits in your table are more important than others, and so are “weighted” more heavily in your calculations.
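
As a rough illustration of what “weighting” could look like, here is a small Python sketch. The traits, the two species, and the weight values are all invented for the example, and actual methods for weighting characters are more sophisticated than a simple weighted percentage.

```python
def weighted_similarity(a, b, weights):
    """Percent similarity where each trait counts in proportion to its weight.

    Only traits that have a weight and are scored for both species are used.
    """
    usable = [t for t in weights if t in a and t in b]
    total = sum(weights[t] for t in usable)
    if total == 0:
        raise ValueError("no weighted traits are scored for both species")
    matched = sum(weights[t] for t in usable if a[t] == b[t])
    return 100.0 * matched / total


# Hypothetical data: two made-up species and made-up weights.
species_a = {"limbs": "four", "covering": "fur", "eggs": "no", "tail": "yes"}
species_c = {"limbs": "four", "covering": "scales", "eggs": "yes", "tail": "yes"}

# Assumption for illustration: "covering" and "eggs" are judged more informative.
weights = {"limbs": 1.0, "covering": 3.0, "eggs": 3.0, "tail": 1.0}

if __name__ == "__main__":
    print(weighted_similarity(species_a, species_c, weights))
```

These two species match on two of four traits, so an unweighted count would call them 50% similar. Because the traits they differ on carry more weight here, the weighted score comes out lower.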

** Charles Babbage of Difference Engine fame was a contemporary of Darwin’s, and they even corresponded about Babbage’s calculating devices.